Robots.txt Plugin Configuration

Installation

The Robots.txt plugin can be added to your project using the Essentials setup application.

Prerequisites:

- Bloomreach Experience Manager project with Essentials setup application.

Instructions:

- Using the Essentials setup application, add Robots.txt to your project.

- Rebuild and restart your project.

- Point your web browser to http://localhost:8080/site/robots.txt to see the robots.txt.
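If you would rather check the result from a script than from a browser, the following minimal Python sketch can help. It is an optional helper, not part of the plugin. It downloads the file with the standard-library `urllib.request` and parses it with `urllib.robotparser.RobotFileParser`. The URL is the one from the step above, and the helper names `parse_robots` and `fetch_robots` are hypothetical.

```python
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser

# URL from the installation steps; adjust host, port or context path as needed.
ROBOTS_URL = "http://localhost:8080/site/robots.txt"


def parse_robots(text):
    """Parse robots.txt text into a RobotFileParser for rule checks."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def fetch_robots(url=ROBOTS_URL, timeout=10):
    """Download robots.txt from a running project and return (text, parser)."""
    with urlopen(url, timeout=timeout) as response:
        body = response.read().decode("utf-8")
    return body, parse_robots(body)
```

With the project running, `body, rules = fetch_robots()` followed by `print(body)` shows the same text as the browser, and `rules.can_fetch("SomeBot", "/some/path")` reports whether that path is allowed for a given user agent.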

Configuration

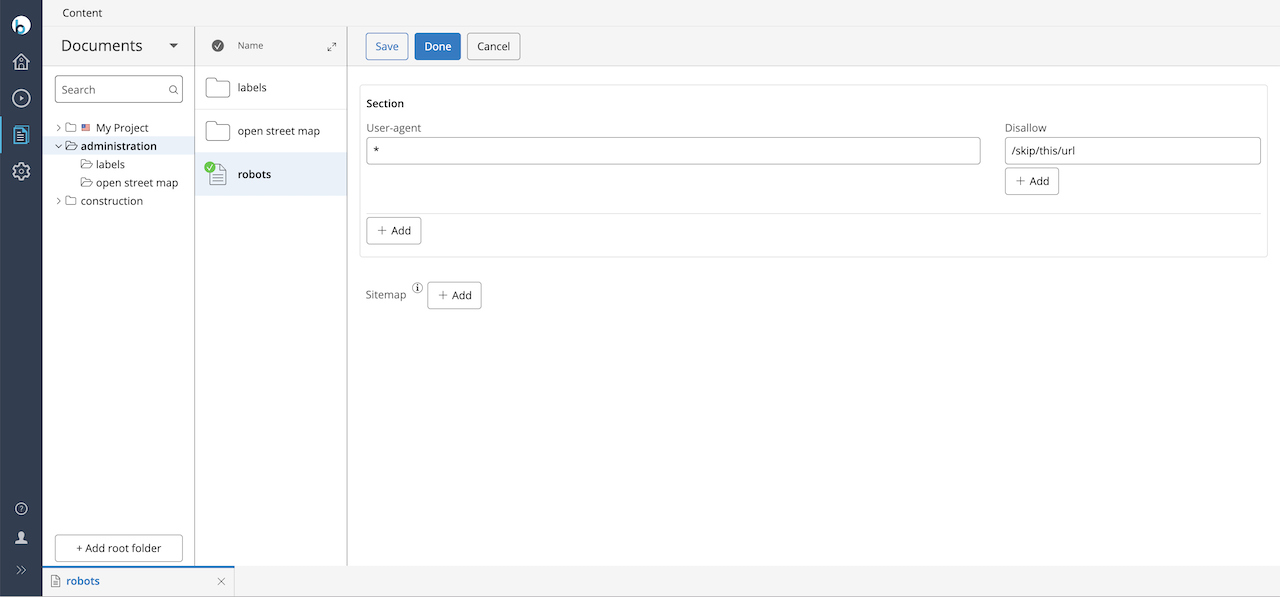

The robots.txt file is configured through a special document in the CMS.

- Open the Content application.

- Browse to the administration folder.

- Edit and publish the robots document.

Multiple sections are supported. Each section represents a User-agent: line followed by zero or more Disallow: lines.

With the configuration shown in the screenshot above, all URLs under [your.site]/abc and [your.site]/def are disallowed for the User-agent called Googlebot. In addition, all URLs under [your.site]/skip/this/url are disallowed for all User-agents.
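To make the section model concrete, here is a minimal Python sketch of how sections like the ones described above map to robots.txt text. It is an illustration, not the plugin's implementation. The `SECTIONS` list stands in for the CMS document, and `render_sections` is a hypothetical helper. Python's standard-library `urllib.robotparser` is used only to check that the rendered rules behave as intended.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical in-memory stand-in for the robots document: each section is
# a User-agent value plus zero or more Disallow paths.
SECTIONS = [
    ("Googlebot", ["/abc", "/def"]),
    ("*", ["/skip/this/url"]),
]


def render_sections(sections):
    """Render each section as a User-agent line followed by its Disallow lines."""
    lines = []
    for user_agent, disallows in sections:
        lines.append(f"User-agent: {user_agent}")
        lines.extend(f"Disallow: {path}" for path in disallows)
        lines.append("")  # blank line separates sections
    return "\n".join(lines)


robots_txt = render_sections(SECTIONS)
print(robots_txt)

# Check the rendered rules with the standard-library robots.txt parser.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("Googlebot", "/abc/page"))            # False
print(parser.can_fetch("SomeOtherBot", "/skip/this/url/x"))  # False
print(parser.can_fetch("SomeOtherBot", "/abc/page"))         # True
```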

Furthermore, the Robots.txt plugin automatically disallows all faceted navigation URLs. This behavior can only be overridden in the CMS Console, by adding the Boolean property robotstxt:disallowfacnav with value false to the Robots.txt configuration document.
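The effect of the faceted navigation rule can be pictured with another small Python sketch. It assumes that faceted navigation paths are appended to every section after that section's own Disallow lines, and that a `disallow_facnav` flag plays the role of the robotstxt:disallowfacnav property. The facet paths are hypothetical placeholders, since the plugin discovers the real ones itself, and the function is not the plugin's code.

```python
# Hypothetical sections mirroring the configuration described earlier.
SECTIONS = [
    ("Googlebot", ["/abc", "/def"]),
    ("*", ["/skip/this/url"]),
]

# Hypothetical faceted navigation paths. The plugin finds these itself;
# they are hard-coded here only for illustration.
FACNAV_PATHS = ["/products/facets", "/news/facets"]


def render_robots_txt(sections, facnav_paths, disallow_facnav=True):
    """Render sections, appending faceted navigation paths to each one.

    disallow_facnav stands in for robotstxt:disallowfacnav: when False,
    only the section-specific Disallow lines are emitted.
    """
    lines = []
    for user_agent, disallows in sections:
        lines.append(f"User-agent: {user_agent}")
        lines.extend(f"Disallow: {path}" for path in disallows)
        if disallow_facnav:
            # Faceted navigation paths come after the section-specific paths.
            lines.extend(f"Disallow: {path}" for path in facnav_paths)
        lines.append("")  # blank line between sections
    return "\n".join(lines)


with_facnav = render_robots_txt(SECTIONS, FACNAV_PATHS)
without_facnav = render_robots_txt(SECTIONS, FACNAV_PATHS, disallow_facnav=False)
print(with_facnav)
```

Setting the flag to False corresponds to adding robotstxt:disallowfacnav with value false: the output then contains only the paths entered in the document.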

Default Delivery Tier Configuration

The setup application adds all the required delivery tier configuration. This configuration works for typical projects. If needed, the configuration can be adapted to the requirements of your project.

A component configuration and a FreeMarker template are added to the hst:default configuration:

    /hst:hst/hst:configurations/hst:default/hst:pages/robotstxt:
      jcr:primaryType: hst:component
      hst:componentclassname: org.onehippo.forge.robotstxt.components.RobotstxtComponent
      hst:template: robotstxt.ftl

    /hst:hst/hst:configurations/hst:default/hst:templates/robotstxt.ftl:
      jcr:primaryType: hst:template
      hst:script: (freemarker template source code)

A sitemap item (i.e. URL) is added to your project's configuration (e.g. myproject):

    /hst:hst/hst:configurations/myproject/hst:sitemap/robots.txt:
      jcr:primaryType: hst:sitemapitem
      hst:componentconfigurationid: hst:pages/robotstxt
      hst:relativecontentpath: ../administration/robots

Configure Different Robots.txt Per Mount

A different robots.txt can be used per mount by setting the robotstxt:path property on the mount to the path of the robots.txt document, relative to the mount's content root. For example, the following staging mount uses the robots-noindex document:

    /hst:myproject/hst:hosts/staging/com/example/staging/hst:root:
      jcr:primaryType: hst:mount
      hst:homepage: root
      hst:mountpoint: /hst:myproject/hst:sites/myproject
      robotstxt:path: ../administration/robots-noindex
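Both the sitemap item's hst:relativecontentpath and a mount's robotstxt:path are relative paths, so it can help to see how they resolve. The Python sketch below assumes a mount content root of /content/documents/myproject and assumes that robotstxt:path, when present, takes precedence over the sitemap default. Both are illustrative assumptions, and `resolve_robots_document` is a hypothetical helper, not a plugin API.

```python
import posixpath


def resolve_robots_document(content_root, sitemap_relative_path, mount_robotstxt_path=None):
    """Return the absolute repository path of the robots document to render.

    Assumption: a robotstxt:path on the mount overrides the sitemap item's
    hst:relativecontentpath; both are relative to the mount's content root.
    """
    relative = mount_robotstxt_path or sitemap_relative_path
    # Join, then normalize away the ".." segment.
    return posixpath.normpath(posixpath.join(content_root, relative))


# Assumed content root for the myproject site.
ROOT = "/content/documents/myproject"

# Default mount: falls back to the sitemap item's relative content path.
print(resolve_robots_document(ROOT, "../administration/robots"))
# Staging mount: robotstxt:path selects a different document.
print(resolve_robots_document(ROOT, "../administration/robots", "../administration/robots-noindex"))
```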

Example

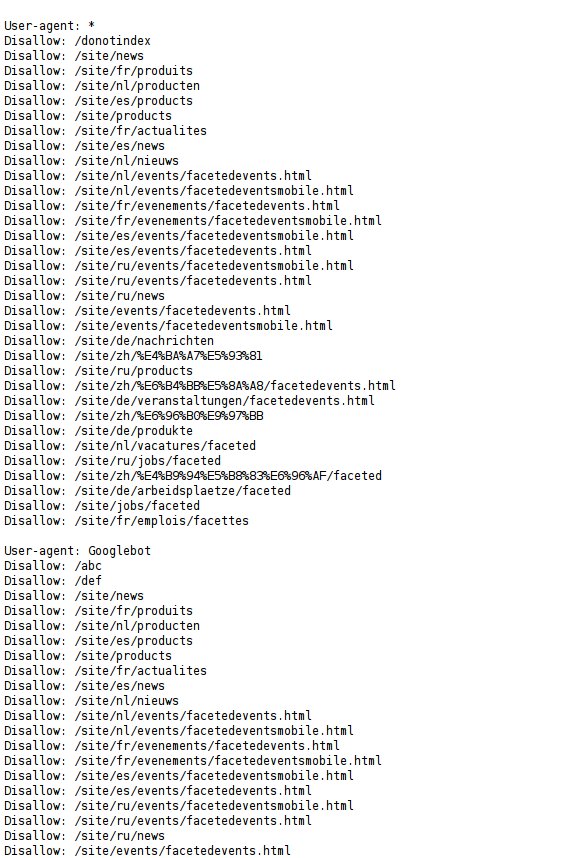

The screenshot above shows sample output for the Hippo demo website, which has quite a few faceted navigation links. Note how they appear after the section-specific paths in every section.