Robots.txt Plugin

Introduction

Functionality

The Robots.txt plugin adds a special document type to Bloomreach Experience Manager that lets webmasters manage the contents of the robots.txt file retrieved by web robots (crawlers). See Robots.txt Specifications for more information on the format and purpose of that file.

The plugin provides Beans and Components for retrieving the robots.txt-related data from the content repository, and a sample FreeMarker template for rendering that data as a robots.txt file.
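The plugin's own rendering is done by its Java beans and a FreeMarker template, neither of which is reproduced here. As a rough, language-neutral illustration of what such a template produces, the Python sketch below turns a hypothetical data model into robots.txt text: one group per user-agent section, followed by the sitemap URLs. The `Section` class and `render_robots_txt` function are assumptions made for this example, not the plugin's API.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """Hypothetical stand-in for one user-agent section entered in the CMS."""
    user_agents: list[str]
    disallows: list[str] = field(default_factory=list)


def render_robots_txt(sections: list[Section], sitemaps: list[str]) -> str:
    """Render user-agent groups and sitemap URLs as robots.txt text."""
    blocks = []
    for section in sections:
        # One "User-agent" line per agent, then that group's "Disallow" lines.
        lines = [f"User-agent: {agent}" for agent in section.user_agents]
        lines += [f"Disallow: {path}" for path in section.disallows]
        blocks.append("\n".join(lines))
    if sitemaps:
        # Sitemap directives are independent of any user-agent group.
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    # Separate blocks with a blank line and end the file with a newline.
    return "\n\n".join(blocks) + "\n"


# Data mirroring the example robots.txt on this page.
robots = render_robots_txt(
    [
        Section(["*"], ["/skip/this/url", "/a/b"]),
        Section(["googlebot"], ["/a/b", "/x/y"]),
    ],
    ["http://www.example.com/sitemap.xml", "http://subsite.example.com/subsite-map.xml"],
)
print(robots, end="")
```

Keeping the sitemap lines in their own trailing block reflects that they do not belong to any user-agent group.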

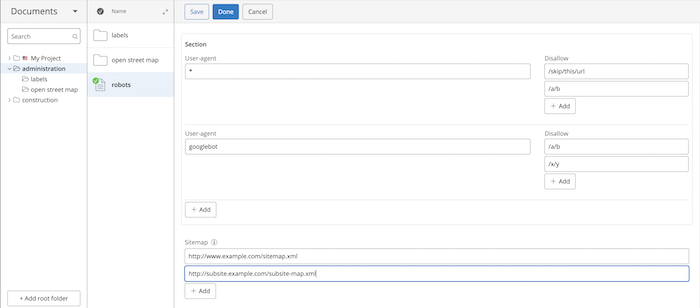

Below is a screenshot of the CMS document editor, where the content of the robots.txt file(s) can be entered / modified.

The screenshot above results in the following robots.txt in the site:

User-agent: *
Disallow: /skip/this/url
Disallow: /a/b

User-agent: googlebot
Disallow: /a/b
Disallow: /x/y

Sitemap: http://www.example.com/sitemap.xml
Sitemap: http://subsite.example.com/subsite-map.xml
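To see how a crawler that honors the robots exclusion rules would read the example file above, it can be fed to Python's standard `urllib.robotparser` module. This only illustrates crawler-side interpretation and is not part of the plugin. Note that `googlebot` matches its own group and therefore ignores the `*` group entirely, while any other agent (the name `ExampleBot` is made up for this example) falls back to `*`.

```python
import urllib.robotparser

# The example robots.txt from this page, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /skip/this/url
Disallow: /a/b

User-agent: googlebot
Disallow: /a/b
Disallow: /x/y

Sitemap: http://www.example.com/sitemap.xml
Sitemap: http://subsite.example.com/subsite-map.xml
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot uses its own group, so only /a/b and /x/y are off limits to it.
print(parser.can_fetch("Googlebot", "http://www.example.com/x/y/page"))        # False
print(parser.can_fetch("Googlebot", "http://www.example.com/skip/this/url"))   # True
# Any other crawler falls back to the "*" group.
print(parser.can_fetch("ExampleBot", "http://www.example.com/skip/this/url"))  # False
print(parser.can_fetch("ExampleBot", "http://www.example.com/x/y"))            # True
# Sitemap lines are collected independently of the groups (Python 3.8+).
print(parser.site_maps())
```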

By default, the Robots.txt plugin disallows all URLs of a preview site, in case such a site is exposed publicly, so that search engines do not index preview sites.
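Disallowing every URL comes down to a single `Disallow: /` rule in the `*` group, because every path starts with `/`. The sketch below assumes a hypothetical `is_preview` flag and placeholder live rules; how the plugin actually detects a preview site is not described here. It checks the result with Python's standard `urllib.robotparser`, and the host names are illustrative only.

```python
import urllib.robotparser

# Hypothetical rules a live site might serve (stand-in for CMS content).
LIVE_RULES = "User-agent: *\nDisallow: /skip/this/url\n"

# Disallow-all rules: the "/" prefix matches every path on the host.
PREVIEW_RULES = "User-agent: *\nDisallow: /\n"


def robots_for_site(is_preview: bool, live_rules: str = LIVE_RULES) -> str:
    """Return robots.txt content, blocking all crawling on a preview site."""
    return PREVIEW_RULES if is_preview else live_rules


def crawler_allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Ask the standard-library parser whether `agent` may fetch `url`."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(crawler_allowed(robots_for_site(True), "Googlebot", "http://preview.example.com/"))       # False
print(crawler_allowed(robots_for_site(False), "Googlebot", "http://www.example.com/news"))      # True
```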

Source Code

https://github.com/bloomreach/brxm/tree/brxm-14.7.3/robotstxt